Looking for an answer on the topic "keras history callback"? We answer your questions on the website Ar.taphoamini.com in the category: See more updated computer knowledge here. You will find the answer right below.

What is the Keras History callback?

Access Model Training History in Keras

Keras provides the capability to register callbacks when training a deep learning model. One of the default callbacks registered when training every deep learning model is the History callback. It records training metrics for each epoch.

What is History.history in Keras?

In the Keras docs, we find: The History.history attribute is a dictionary recording training loss values and metrics values at successive epochs, as well as validation loss values and validation metrics values (if applicable).
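To make the shape of that dictionary concrete, here is a minimal plain-Python sketch. The numbers are invented for illustration, and the key names (`accuracy`, `val_accuracy`) are an assumption: they depend on the metrics passed to `compile()` and on the Keras version (older releases use `acc` and `val_acc`). The helper `best_epoch` is hypothetical, not part of Keras.

```python
# Hypothetical values, shaped like the dictionary Keras stores in History.history:
# one list per metric, one entry per epoch.
history = {
    "loss": [0.92, 0.61, 0.45, 0.38],
    "accuracy": [0.60, 0.74, 0.81, 0.85],
    "val_loss": [0.88, 0.66, 0.58, 0.60],
    "val_accuracy": [0.62, 0.71, 0.76, 0.75],
}


def best_epoch(history, metric="val_loss"):
    """Return the 1-based epoch with the lowest value of `metric`."""
    values = history[metric]
    return min(range(len(values)), key=values.__getitem__) + 1


# Print every recorded metric, epoch by epoch.
for name, values in history.items():
    print(f"{name}: {values}")

print("best epoch by val_loss:", best_epoch(history))
```

In a real run, the same dictionary would be read from the `history` attribute of the object returned by `fit`.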

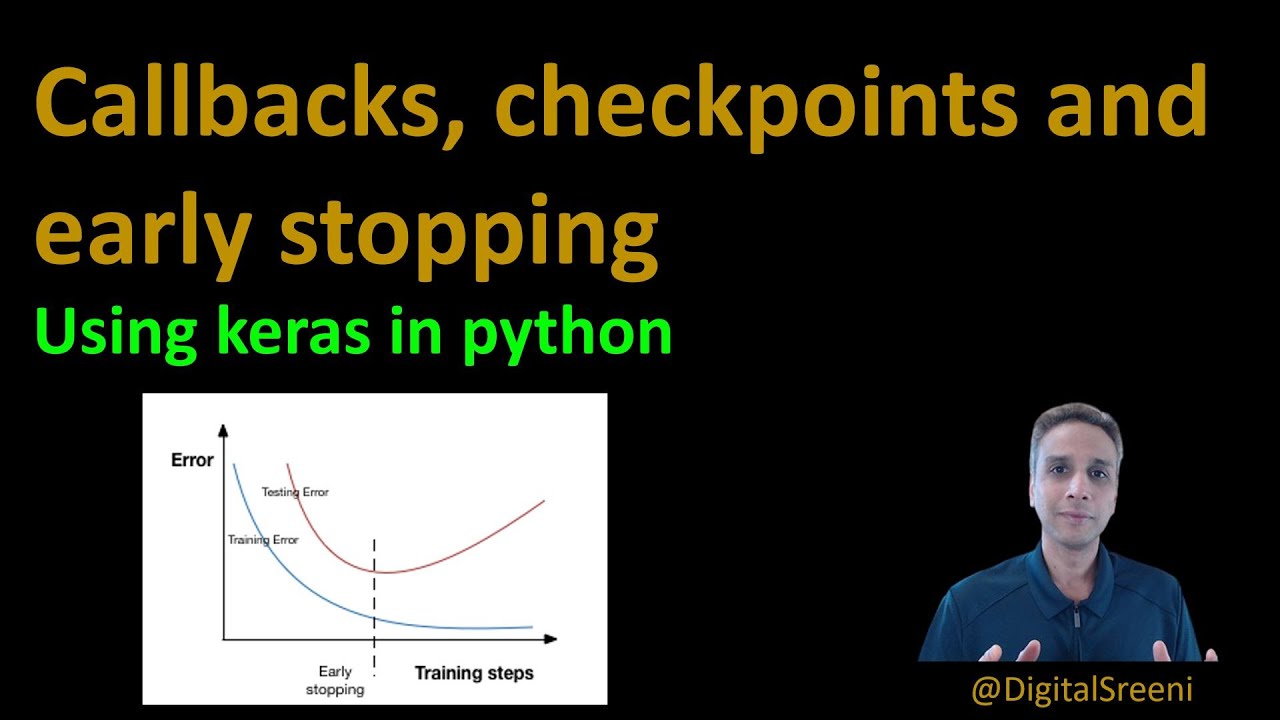

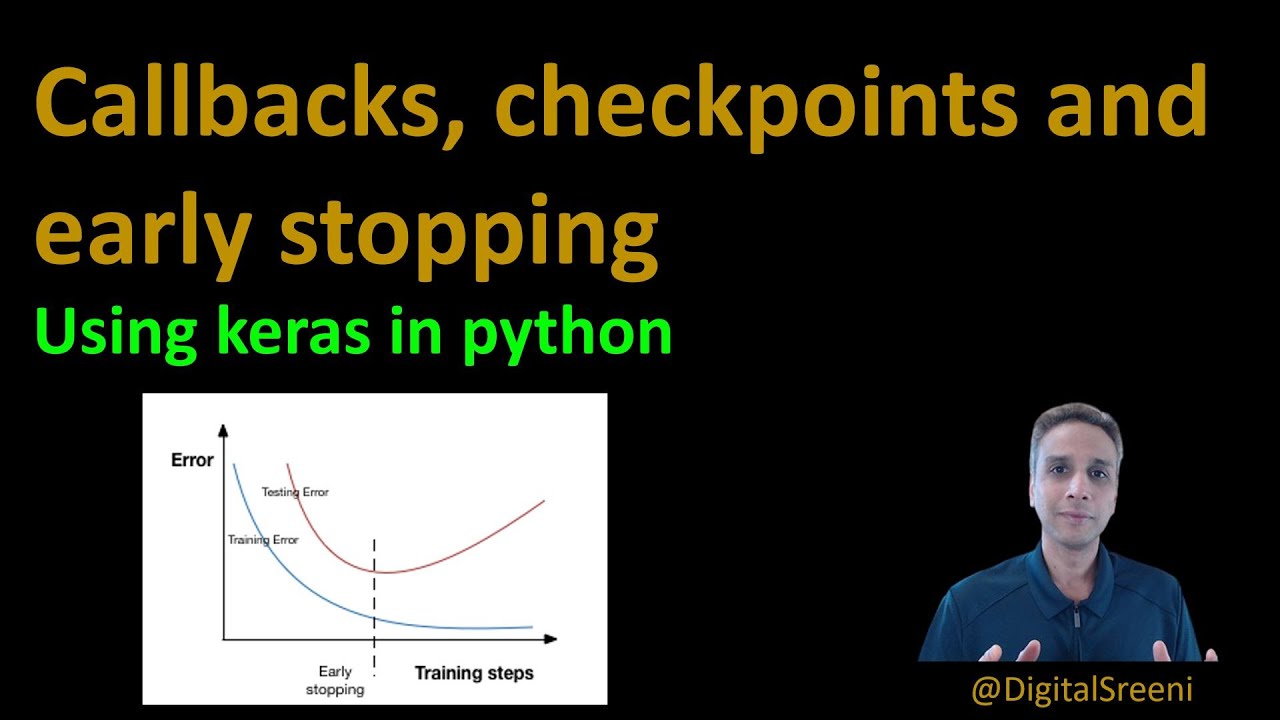

129 – What are Callbacks, Checkpoints and Early Stopping in deep learning (Keras and TensorFlow)

What is a callback in TensorFlow?

TensorFlow callbacks are functions or blocks of code that are executed at a specific instant while training a deep learning model. We are all familiar with the training process of a deep learning model.
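The idea of code that runs "at a specific instant" can be sketched without Keras. The example below is a toy, assuming a hook named `on_epoch_end(epoch, logs)` that mirrors the shape of the Keras hook of the same name. The classes `Callback` and `RecordingCallback` and the function `toy_fit` are hypothetical stand-ins, and the loss values are placeholders rather than the result of real training.

```python
class Callback:
    """Base class with a no-op hook, mirroring the shape of on_epoch_end in Keras."""

    def on_epoch_end(self, epoch, logs=None):
        pass


class RecordingCallback(Callback):
    """Toy stand-in for a history recorder: appends each epoch's logs to a dict."""

    def __init__(self):
        self.history = {}

    def on_epoch_end(self, epoch, logs=None):
        for name, value in (logs or {}).items():
            self.history.setdefault(name, []).append(value)


def toy_fit(epochs, callbacks):
    """Hypothetical training loop: fakes a loss and fires the hook after each epoch."""
    for epoch in range(epochs):
        logs = {"loss": 1.0 / (epoch + 1)}  # placeholder, not a real training step
        for callback in callbacks:
            callback.on_epoch_end(epoch, logs)


recorder = RecordingCallback()
toy_fit(epochs=3, callbacks=[recorder])
print(recorder.history)
```

The training loop knows nothing about what each callback does. It only promises to call the hook at a fixed point, which is what lets logging, checkpointing, and early stopping be added without touching the loop itself.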

What does model.fit return?

By default, Keras' model.fit() returns a History callback object. This object keeps track of the accuracy, loss, and other training metrics for each epoch, in memory.

How many epochs should you train for?

The right number of epochs depends on the inherent perplexity (or complexity) of your dataset. A good rule of thumb is to start with a value that is 3 times the number of columns in your data. If you find that the model is still improving after all epochs complete, try again with a higher value.

How do you improve validation accuracy?

One of the easiest ways to increase validation accuracy is to add more data. This is especially useful if you don't have many training instances. If you are working on image recognition models, you may consider increasing the diversity of your available dataset by employing data augmentation.

What is val_loss in Keras?

val_loss is the loss computed on the validation data at the end of each epoch, and val_acc is the corresponding accuracy, a measure of how good your model's predictions are on that data. When the two losses (both loss and val_loss) are decreasing and the two accuracies (acc and val_acc) are increasing, the model is training well.

See some more details on the topic keras history callback here:

tf.keras.callbacks.History | TensorFlow Core v2.9.0

tf.keras.callbacks.History(). This callback is automatically applied to every Keras model. The History object gets returned by the fit method of models.

Python Examples of keras.callbacks.History – ProgramCreek …

The following are 9 code examples showing how to use keras.callbacks.History(). These examples are extracted from open source projects.

tf.keras.callbacks.History | TensorFlow

Defined in tensorflow/python/keras/callbacks.py. Callback that records events into a History object. This callback is automatically applied to every Keras …

Callbacks API – Keras

You can use callbacks to: Write TensorBoard logs after every batch of training to monitor your metrics; Periodically save your model to disk; Do early stopping …

What are loss and accuracy in Keras?

The loss value indicates how poorly or how well a model behaves after each iteration of optimization. An accuracy metric is used to measure the algorithm's performance in an interpretable way. The accuracy of a model is usually determined after the model parameters have been learned and is calculated in the form of a percentage.
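As a small illustration of accuracy expressed as a percentage, the sketch below counts matching predictions. The function name `accuracy_percent` and the sample labels are hypothetical. Note that Keras itself reports accuracy as a fraction between 0 and 1, so the multiplication by 100 here is only for readability.

```python
def accuracy_percent(y_true, y_pred):
    """Share of predictions that match the labels, as a percentage."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return 100.0 * correct / len(y_true)


labels = [0, 1, 1, 0, 1]        # hypothetical ground truth
predictions = [0, 1, 0, 0, 1]   # hypothetical model outputs
print(accuracy_percent(labels, predictions))  # 4 of 5 predictions match
```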

What is verbose in a neural network?

verbose controls how the output of your neural network is displayed while it is training. If you set verbose = 0, it will show nothing. With verbose = 1 you get a progress bar, and with verbose = 2 one line per epoch.

What are the types of callback?

There are two types of callbacks, differing in how they control data flow at runtime: blocking callbacks (also known as synchronous callbacks or just callbacks) and deferred callbacks (also known as asynchronous callbacks).

How do you get TensorBoard?

- Open up the command prompt (Windows) or terminal (Ubuntu/Mac).

- Go into the project home directory.

- If you are using Python virtualenv, activate the virtual environment you have installed TensorFlow in.

- Make sure that you can see the TensorFlow library through Python.

How do I make a callback in JavaScript?

A custom callback function can be created by accepting a function, conventionally named callback, as the last parameter. It can then be invoked by calling callback() at the end of the function. The typeof operator is optionally used to check whether the argument passed is actually a function.

TensorFlow Tutorial 14 – Callbacks with Keras and Writing Custom Callbacks

What is validation_split in Keras?

validation_split: Float between 0 and 1. Fraction of the training data to be used as validation data. The model will set apart this fraction of the training data, will not train on it, and will evaluate the loss and any model metrics on this data at the end of each epoch.
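A rough plain-Python sketch of what "setting apart a fraction" means. It assumes the held-out samples are taken from the end of the data before any shuffling, which is how the Keras documentation describes `validation_split`. The function name `split_for_validation` is hypothetical and the rounding may differ in detail from the library's own code.

```python
import math


def split_for_validation(samples, validation_split):
    """Hold out the trailing fraction of `samples`, without shuffling first."""
    if not 0.0 < validation_split < 1.0:
        raise ValueError("validation_split must be between 0 and 1")
    split_at = math.floor(len(samples) * (1.0 - validation_split))
    return samples[:split_at], samples[split_at:]


# Eight hypothetical samples, a quarter of them held out for validation.
train, val = split_for_validation(list(range(8)), validation_split=0.25)
print("train:", train)
print("validation:", val)
```

Because the split is positional, data that is sorted by class or by time should usually be shuffled before training, otherwise the validation set may not resemble the training set.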

What is categorical_crossentropy in Keras?

categorical_crossentropy: Used as a loss function for multi-class classification models where there are two or more output labels. The output label is assigned a one-hot category encoding value in the form of 0s and 1s. The output label, if present in integer form, is converted into categorical encoding using Keras.
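The following sketch works through one sample by hand, assuming the standard definition of categorical cross-entropy, minus the sum of `y_true * log(y_pred)` over the classes. The helpers `one_hot` and `categorical_crossentropy` are illustrative plain-Python functions, not the Keras implementations, and the predicted probabilities are invented.

```python
import math


def one_hot(label, num_classes):
    """Turn an integer class label into a list of 0s with a single 1."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """-sum(y_true * log(y_pred)) for one sample; eps guards against log(0)."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


y_true = one_hot(2, num_classes=3)   # integer label 2 becomes [0.0, 0.0, 1.0]
y_pred = [0.1, 0.2, 0.7]             # hypothetical softmax output
loss = categorical_crossentropy(y_true, y_pred)
print(y_true, round(loss, 4))
```

Only the probability assigned to the true class contributes to the loss, so here the result equals `-log(0.7)`.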

What is an epoch in Keras?

Epoch: an arbitrary cutoff, generally defined as "one pass over the entire dataset", used to separate training into distinct phases, which is useful for logging and periodic evaluation. When using validation_data or validation_split with the fit method of Keras models, evaluation will be run at the end of every epoch.
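To see what "one pass over the entire dataset" means in batches, here is a one-line calculation. It assumes the last, smaller batch is still processed rather than dropped. The function name and the sample count are hypothetical.

```python
import math


def steps_per_epoch(num_samples, batch_size):
    """Number of batches needed to see every sample once (last batch may be smaller)."""
    return math.ceil(num_samples / batch_size)


# 1000 hypothetical samples with a batch size of 32: 31 full batches plus one of 8.
print(steps_per_epoch(1000, 32))
```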

Is 100 epochs too much?

I got the best results with a batch size of 32 and epochs = 100 while training a Sequential model in Keras with 3 hidden layers. Generally a batch size of 32 or 25 is good, with epochs = 100, unless you have a large dataset.

Are more epochs always better?

Assuming you track the performance with a validation set, as long as the validation error is decreasing, more epochs are beneficial: the model is improving on both seen (training) and unseen (validation) data.

Can you have too many epochs?

Firstly, increasing the number of epochs won't necessarily cause overfitting, but it certainly can. If the learning rate and model parameters are small, it may take many epochs to cause measurable overfitting. That said, it is common for more training to do so.

How do I stop overfitting?

- Cross-validation. Cross-validation is a powerful preventative measure against overfitting. …

- Train with more data. It won't work every time, but training with more data can help algorithms detect the signal better. …

- Remove features. …

- Early stopping. …

- Regularization. …

- Ensembling.
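Of the items above, early stopping is the easiest to sketch in a few lines. The function below is a simplified plain-Python illustration of patience-based stopping on a list of validation losses. The parameter names `patience` and `min_delta` echo those of the Keras `EarlyStopping` callback, but the function `early_stopping_epoch` and the loss values are hypothetical, and the real callback has more options.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return the 1-based epoch at which training would stop, or None if it never triggers."""
    best = float("inf")
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


# Hypothetical validation losses: improvement stalls after epoch 3.
val_losses = [0.90, 0.70, 0.60, 0.62, 0.61, 0.65]
print(early_stopping_epoch(val_losses, patience=2))
```

With a patience of 2, training stops after two consecutive epochs that fail to beat the best validation loss seen so far.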

How do you stabilize validation accuracy?

- Make sure that you are able to over-fit your train set.

- Make sure that your train/test sets come from the same distribution.

- Add dropout or regularization layers.

- Shuffle your train sets while learning.

Why is there a dropout layer?

The Dropout layer randomly sets input units to 0 with a frequency of rate at each step during training time, which helps prevent overfitting. Inputs not set to 0 are scaled up by 1/(1 - rate) such that the sum over all inputs is unchanged.
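The scaling rule can be checked with a small simulation. This is a plain-Python sketch of dropout on a flat list, not the Keras layer. The function name `dropout` and the seeded random generator are choices made for the example. It also shows that the sum is preserved on average rather than exactly for any single draw.

```python
import random


def dropout(inputs, rate, rng):
    """Zero each input with probability `rate`; scale the survivors by 1 / (1 - rate)."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else x * scale for x in inputs]


rng = random.Random(0)            # fixed seed so the run is repeatable
inputs = [1.0] * 10000
outputs = dropout(inputs, rate=0.5, rng=rng)

kept = sum(1 for x in outputs if x != 0.0)
print("units kept:", kept)
print("mean before:", sum(inputs) / len(inputs))
print("mean after: ", sum(outputs) / len(outputs))
```

With a rate of 0.5, each surviving unit is doubled, so the mean after dropout stays close to the mean before it.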

Callback Functions When Training Neural Networks in Keras and TensorFlow

What is the Adam optimizer?

Adam is a replacement optimization algorithm for stochastic gradient descent for training deep learning models. Adam combines the best properties of the AdaGrad and RMSProp algorithms to provide an optimization algorithm that can handle sparse gradients on noisy problems.
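For readers who want to see the update rule, here is a minimal sketch of Adam on a single scalar parameter, following the commonly published form of the algorithm with bias-corrected moment estimates. The function name `adam_minimize`, the learning rate, and the test function are assumptions chosen for the example. It is not the Keras optimizer.

```python
import math


def adam_minimize(grad, x0, steps, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """Run `steps` Adam updates on one scalar parameter and return its final value."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g        # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g    # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)           # bias correction for the zero start
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Minimize f(x) = (x - 3)^2, whose gradient is 2 * (x - 3) and whose minimum is at x = 3.
x_min = adam_minimize(lambda x: 2 * (x - 3), x0=0.0, steps=500)
print(x_min)
```

Dividing by the square root of the squared-gradient average gives each parameter its own effective step size, which is the part borrowed from AdaGrad and RMSProp.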

How do I fix overfitting in a neural network?

Data Augmentation

One of the best techniques for reducing overfitting is to increase the size of the training dataset. As discussed in the previous technique, when the size of the training data is small, the network tends to have greater control over the training data.
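As a toy illustration of enlarging a dataset through augmentation, the sketch below adds a horizontally flipped copy of every image. Images are represented as nested lists of pixel values, and the helper names are hypothetical. Flipping is only one possible transformation, and it assumes the label stays valid for the mirrored image, which is not true for every task (text or digits, for example).

```python
def horizontal_flip(image):
    """Mirror a 2D image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]


def augment(dataset):
    """Return the original (image, label) pairs plus a flipped copy of each."""
    return dataset + [(horizontal_flip(image), label) for image, label in dataset]


# Two hypothetical 2x3 grayscale images with class labels.
dataset = [
    ([[0, 1, 2], [3, 4, 5]], "cat"),
    ([[9, 8, 7], [6, 5, 4]], "dog"),
]
augmented = augment(dataset)
print(len(dataset), "->", len(augmented))
print(augmented[2])
```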
