This page collects short answers to common questions about keras losses binary_crossentropy and related Keras loss functions.

What is binary_crossentropy loss?

binary_crossentropy: Used as a loss function for binary classification models. The binary_crossentropy function computes the cross-entropy loss between true labels and predicted labels. categorical_crossentropy: Used as a loss function for multi-class classification models where there are two or more output labels.
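To make the definition concrete, here is a minimal pure-Python sketch of the mean binary cross-entropy. It is not the Keras implementation: the function name mirrors the Keras one for readability only, and the clipping constant `eps` is an assumption chosen for this sketch so that `log(0)` never occurs.

```python
import math

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy for 0/1 labels and predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        # Clip the probability away from 0 and 1 (eps is an assumption of this sketch).
        p = min(max(p, eps), 1.0 - eps)
        # Per-sample loss: -[t*log(p) + (1 - t)*log(1 - p)]
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)

labels = [1, 0, 1, 0]
probs = [0.9, 0.1, 0.8, 0.3]
loss = binary_crossentropy(labels, probs)
print(round(loss, 4))
```

Confident, correct predictions give a loss close to zero, while confident, wrong predictions are penalized heavily.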

How is Keras loss calculated?

In deep learning, the loss is computed to get the gradients with respect to the model weights and to update those weights accordingly via backpropagation. The loss is calculated and the network is updated after every iteration, until further updates no longer bring any improvement in the desired evaluation metric.
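The loop described above can be sketched on a toy problem. This is an illustration only, not how Keras trains a network: it fits a single weight `w` in `y = w * x` by plain gradient descent on the mean squared error, and it uses the loss itself as the "evaluation metric" for the stopping rule. The data, learning rate, and tolerance are all assumptions.

```python
def mse(w, xs, ys):
    """Mean squared error of the toy model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def mse_grad(w, xs, ys):
    """Gradient of the MSE with respect to the single weight w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated by y = 2 * x

w = 0.0
learning_rate = 0.01   # assumed value for the sketch
tolerance = 1e-10      # stop once the loss improves by less than this
losses = [mse(w, xs, ys)]

for step in range(10_000):
    # Compute the gradient of the loss and update the weight.
    w -= learning_rate * mse_grad(w, xs, ys)
    losses.append(mse(w, xs, ys))
    # Stop when an update no longer brings a meaningful improvement.
    if losses[-2] - losses[-1] < tolerance:
        break

print(w, losses[-1])
```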

What is the loss in Keras?

Loss: A scalar value that we try to minimize during training of the model. The lower the loss, the closer our predictions are to the true labels. This is commonly Mean Squared Error (MSE) or, often in Keras, categorical cross-entropy.

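What is binary cross-entropy loss in Keras?

BinaryCrossentropy class

Computes the cross-entropy loss between true labels and predicted labels. Use this cross-entropy loss for binary (0 or 1) classification applications. The loss function requires the following inputs: y_true (true label), which is either 0 or 1, and y_pred (predicted value), which is the model's prediction: either a logit (when from_logits=True) or a probability in [0, 1] (when from_logits=False).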

What is the difference between categorical_crossentropy and sparse_categorical_crossentropy?

The two losses compute the same quantity and differ only in how the true labels are encoded. categorical_crossentropy (cce) expects each true label as a one-hot array over the classes, while sparse_categorical_crossentropy (scce) expects each true label as the integer index of the correct class.
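A small sketch can show how the two relate for a single sample. The helper functions below are pure-Python stand-ins written for this illustration, not the Keras functions themselves: one takes a one-hot true label, the other an integer class index, and both return the same loss.

```python
import math

def categorical_crossentropy(one_hot_true, probs):
    """Cross-entropy for one sample whose label is a one-hot vector."""
    return -sum(t * math.log(p) for t, p in zip(one_hot_true, probs))

def sparse_categorical_crossentropy(class_index, probs):
    """Cross-entropy for one sample whose label is an integer class index."""
    return -math.log(probs[class_index])

probs = [0.1, 0.7, 0.2]                              # predicted class probabilities
cce = categorical_crossentropy([0, 1, 0], probs)     # label as one-hot vector
scce = sparse_categorical_crossentropy(1, probs)     # same label as an index
print(cce, scce)
```

The sparse form avoids building one-hot arrays, which can matter when there are many classes.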

What is a good cross-entropy loss?

One might wonder: what is a good value for cross-entropy loss, and how do I know whether my training loss is good or bad? Some intuitive guidelines from a MachineLearningMastery post, for a mean loss computed with the natural logarithm:

- Cross-Entropy = 0.00: Perfect probabilities.

- Cross-Entropy < 0.02: Great probabilities.

How many epochs should you train for?

The right number of epochs depends on the inherent perplexity (or complexity) of your dataset. A good rule of thumb is to start with a value that is 3 times the number of columns in your data. If you find that the model is still improving after all epochs complete, try again with a higher value.

What are loss and val_loss in Keras?

loss is computed on the training data and val_loss on the validation data. When both losses (loss and val_loss) are decreasing and both accuracies (acc and val_acc) are increasing, the model is training well. val_acc measures how good the model's predictions are on the validation data.

How do you find the loss function?

Mean squared error (MSE) is the workhorse of basic loss functions; it’s easy to understand and implement and generally works pretty well. To calculate MSE, you take the difference between your predictions and the ground truth, square it, and average it out across the whole dataset.
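The recipe above (difference, square, average) translates almost line for line into code. The following is a minimal sketch with made-up numbers; the function and variable names are chosen for this example.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return sum(squared_errors) / len(squared_errors)

targets = [3.0, -0.5, 2.0, 7.0]
predictions = [2.5, 0.0, 2.0, 8.0]
mse_value = mean_squared_error(targets, predictions)
print(mse_value)  # squared errors 0.25, 0.25, 0.0, 1.0 average to 0.375
```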

How can we reduce loss in deep learning?

Try different activation functions, loss functions, and optimizers. Change the number of layers and the number of units per layer. Change the batch size. Add dropout layers.

What is loss in a neural network?

The loss function in a neural network quantifies the difference between the expected outcome and the outcome produced by the machine learning model. From the loss function, we can derive the gradients which are used to update the weights. The average over all losses constitutes the cost.

What is loss per epoch?

The loss of an epoch is usually defined as the average of the batch losses in that epoch. So you can accumulate the loss values during an epoch and, at the end, divide the sum by the number of batches in the epoch: keep a list `epoch_loss = []`, and for each epoch in `range(n_epochs)` start from `acc_loss = 0` before accumulating.
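The accumulation described above can be sketched as follows. The names `epoch_loss` and `acc_loss` come from the fragment in the text; the function name, the input format (one list of already computed batch losses per epoch), and the numbers are assumptions made for this illustration, since the training step itself is not shown.

```python
def average_epoch_losses(batch_losses_per_epoch):
    """Return one averaged loss per epoch from the recorded batch losses."""
    epoch_loss = []
    for batch_losses in batch_losses_per_epoch:
        acc_loss = 0.0
        for batch_loss in batch_losses:
            # In a real training loop this value would come from the training step.
            acc_loss += batch_loss
        # Divide the accumulated loss by the number of batches in the epoch.
        epoch_loss.append(acc_loss / len(batch_losses))
    return epoch_loss

recorded = [[0.9, 0.7, 0.8], [0.6, 0.5, 0.4]]  # hypothetical batch losses for 2 epochs
epoch_loss = average_epoch_losses(recorded)
print(epoch_loss)
```

Note that averaging batch losses matches the per-sample average only when all batches have the same size; a smaller final batch would need to be weighted by its size.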

Why do we use cross-entropy loss?

Cross-entropy loss is used when adjusting model weights during training. The aim is to minimize the loss: the smaller the loss, the better the model's predicted probabilities match the true labels.

Why is cross-entropy better than MSE?

Cross-entropy loss, or log loss, measures the performance of a classification model whose output is a probability value between 0 and 1. It is preferred for classification, while mean squared error (MSE) is one of the best choices for regression. The choice follows directly from the statement of the problem itself.

How do you calculate cross-entropy loss?

Cross-entropy can be calculated using the probabilities of the events from P and Q, as follows: $H(P, Q) = -\sum_{x \in X} P(x) \log Q(x)$
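As a worked example of the formula, the sketch below evaluates H(P, Q) for two small distributions. The distributions are made up for illustration, and the natural logarithm is assumed, so the result is in nats; the source formula does not fix the base.

```python
import math

def cross_entropy(p, q):
    """H(P, Q) = -sum over x of P(x) * log(Q(x)), using the natural log."""
    # Terms with P(x) == 0 contribute nothing and are skipped.
    return -sum(p_x * math.log(q_x) for p_x, q_x in zip(p, q) if p_x > 0)

p = [0.10, 0.40, 0.50]  # "true" distribution P
q = [0.80, 0.15, 0.05]  # approximating distribution Q

h_pq = cross_entropy(p, q)  # cross-entropy of Q relative to P
h_pp = cross_entropy(p, p)  # equals the entropy of P
print(round(h_pq, 4), round(h_pp, 4))
```

The cross-entropy H(P, Q) is never smaller than the entropy H(P, P), and the two are equal only when Q matches P.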

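What does from_logits=True mean?

from_logits=True signifies that the values passed to the loss by the model are not normalized: they are raw logits rather than probabilities. It is used when the model's output layer has no softmax (or, for binary classification, sigmoid) activation, so the loss function applies the normalization itself.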
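The difference can be illustrated for the binary case with a pure-Python sketch (not Keras code; all function names are chosen for this example). One function takes a probability, the other takes a raw logit and uses the common numerically stable form `max(z, 0) - z*t + log(1 + exp(-|z|))`. Both should agree once the logit is passed through a sigmoid.

```python
import math

def sigmoid(z):
    """Map a raw logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def bce_from_probability(t, p):
    """Binary cross-entropy when the model already outputs a probability."""
    return -(t * math.log(p) + (1 - t) * math.log(1.0 - p))

def bce_from_logit(t, z):
    """Binary cross-entropy computed directly from a raw logit z."""
    # Stable form that avoids computing exp of a large positive number.
    return max(z, 0.0) - z * t + math.log1p(math.exp(-abs(z)))

logit, label = 2.0, 1
loss_a = bce_from_logit(label, logit)                    # like from_logits=True
loss_b = bce_from_probability(label, sigmoid(logit))     # like from_logits=False
print(loss_a, loss_b)
```

Passing logits to a loss that expects probabilities (or the reverse) does not raise an error but silently produces wrong loss values, so the flag has to match the model's final activation.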

What does cross entropy do?

Cross-entropy measures the performance of a classification model based on its predicted probabilities and their error: the higher the probability the model assigns to the true class, the lower the cross-entropy.

What is sparse_categorical_accuracy?

sparse_categorical_accuracy checks whether the true value (an integer class index) is equal to the index of the maximal predicted value. categorical_accuracy is the counterpart for a one-hot encoded y_true.
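The two metrics can be sketched in plain Python to show that they differ only in the label encoding. These helpers are illustrative stand-ins, not the Keras metric functions, and the predictions are made-up numbers.

```python
def argmax(values):
    """Index of the largest value in a list."""
    return max(range(len(values)), key=lambda i: values[i])

def sparse_categorical_accuracy(true_indices, predictions):
    """Fraction of samples whose integer label equals the predicted class index."""
    matches = [int(t == argmax(p)) for t, p in zip(true_indices, predictions)]
    return sum(matches) / len(matches)

def categorical_accuracy(one_hot_true, predictions):
    """Same check, but the labels are one-hot vectors."""
    matches = [int(argmax(t) == argmax(p)) for t, p in zip(one_hot_true, predictions)]
    return sum(matches) / len(matches)

preds = [[0.1, 0.8, 0.1], [0.6, 0.3, 0.1], [0.2, 0.2, 0.6]]
sparse_acc = sparse_categorical_accuracy([1, 0, 1], preds)
dense_acc = categorical_accuracy([[0, 1, 0], [1, 0, 0], [0, 1, 0]], preds)
print(sparse_acc, dense_acc)  # two of the three samples are classified correctly
```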

Where is cross-entropy loss used?

Cross-entropy is commonly used in machine learning as a loss function. Cross-entropy is a measure from the field of information theory, building upon entropy and generally calculating the difference between two probability distributions.

Is log loss the same as cross-entropy?

They are essentially the same; usually, we use the term log loss for binary classification problems, and the more general cross-entropy (loss) for the general case of multi-class classification, but even this distinction is not consistent, and you’ll often find the terms used interchangeably as synonyms.

Can categorical cross-entropy be greater than 1?

Both the sparse categorical cross-entropy (SCE) and the categorical cross-entropy (CCE) can be greater than 1.
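A short calculation shows why. For a single sample the loss is the negative log of the probability assigned to the true class, which is unbounded as that probability approaches zero. The sketch below uses made-up probabilities and the natural logarithm.

```python
import math

probs = [0.1, 0.2, 0.7]  # predicted class probabilities (hypothetical)
true_class = 0           # the model gave the true class only 10%

loss = -math.log(probs[true_class])
print(round(loss, 4))

# With the natural log, the per-sample loss exceeds 1 whenever the
# probability of the true class falls below 1/e (about 0.368).
threshold = 1.0 / math.e
```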

Is 100 epochs too much?

I got the best results with a batch size of 32 and epochs = 100 while training a Sequential model in Keras with 3 hidden layers. Generally, a batch size of 32 or 25 is good, with epochs = 100, unless you have a large dataset.

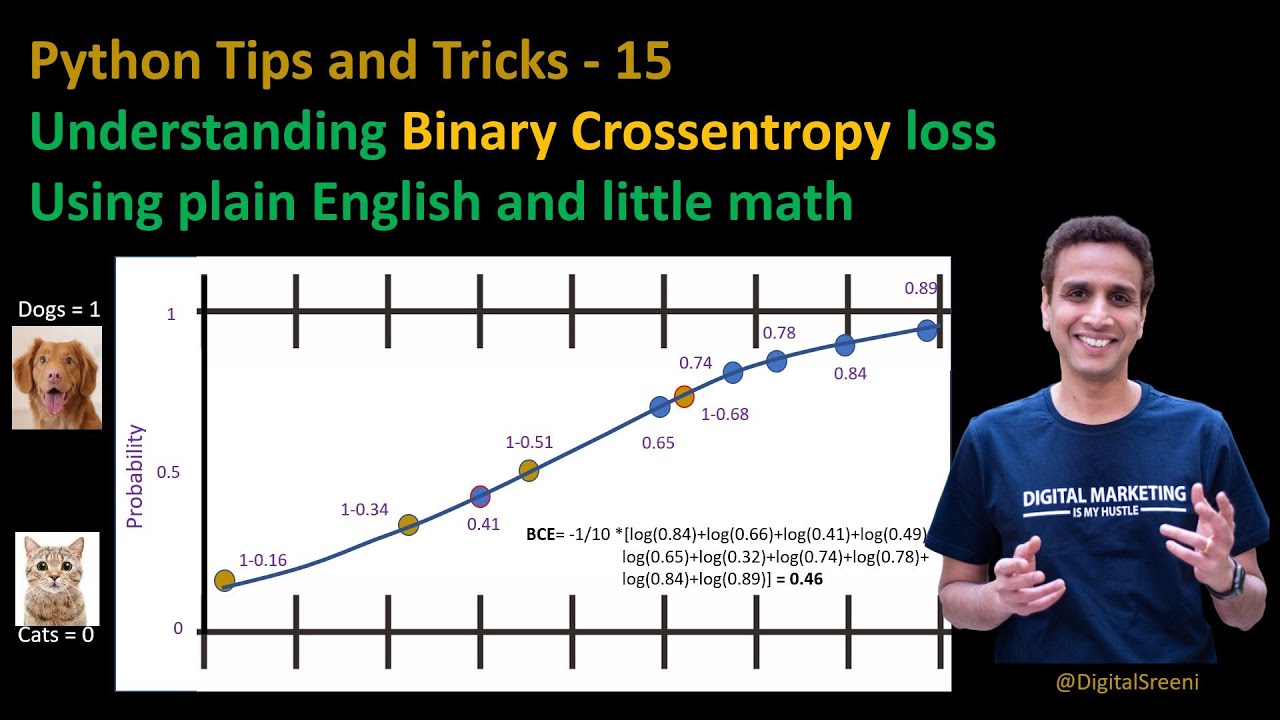

Tips Tricks 15 – Understanding Binary Cross-Entropy loss

Images related to the topicTips Tricks 15 – Understanding Binary Cross-Entropy loss

Do larger models need more epochs?

The number of epochs you require will depend on the size of your model and the variation in your dataset. The size of your model can be a rough proxy for the complexity that it is able to express (or learn).

How can I make my epochs faster?

- Start with a very small learning rate (around 1e-8) and increase the learning rate linearly.

- Plot the loss at each learning-rate step.

- Stop the learning rate finder when loss stops going down and starts increasing.
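The three steps above describe a learning rate finder, which can be sketched on a toy problem. This is a deliberately simplified illustration: the "model" is a single weight with loss `w**2`, the start and end rates and the step count are assumptions, and the function returns the `(learning rate, loss)` pairs that one would plot rather than plotting them.

```python
def lr_range_test(start_lr=1e-8, end_lr=2.0, num_steps=100):
    """Increase the learning rate linearly and record the loss at each step."""
    w = 1.0  # single weight of a toy model with loss(w) = w**2
    lr_step = (end_lr - start_lr) / (num_steps - 1)
    history = []
    previous_loss = w ** 2
    for i in range(num_steps):
        lr = start_lr + i * lr_step  # linear increase of the learning rate
        w -= lr * 2.0 * w            # one gradient step; d(w**2)/dw = 2*w
        loss = w ** 2
        history.append((lr, loss))   # the data one would plot
        if loss > previous_loss:
            # The loss stopped going down and started increasing: stop here.
            break
        previous_loss = loss
    return history

history = lr_range_test()
stop_lr, stop_loss = history[-1]
print(len(history), stop_lr)
```

On this toy loss the updates diverge once the learning rate exceeds 1, which is where the search stops. With a real network the loss curve is noisy, so a smoothed loss is often used for the stopping check.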
