Are you looking for an answer to the topic “Kubernetes pod stuck in ContainerCreating”? We answer all of your questions on the website Ar.taphoamini.com. You will find the answer right below.

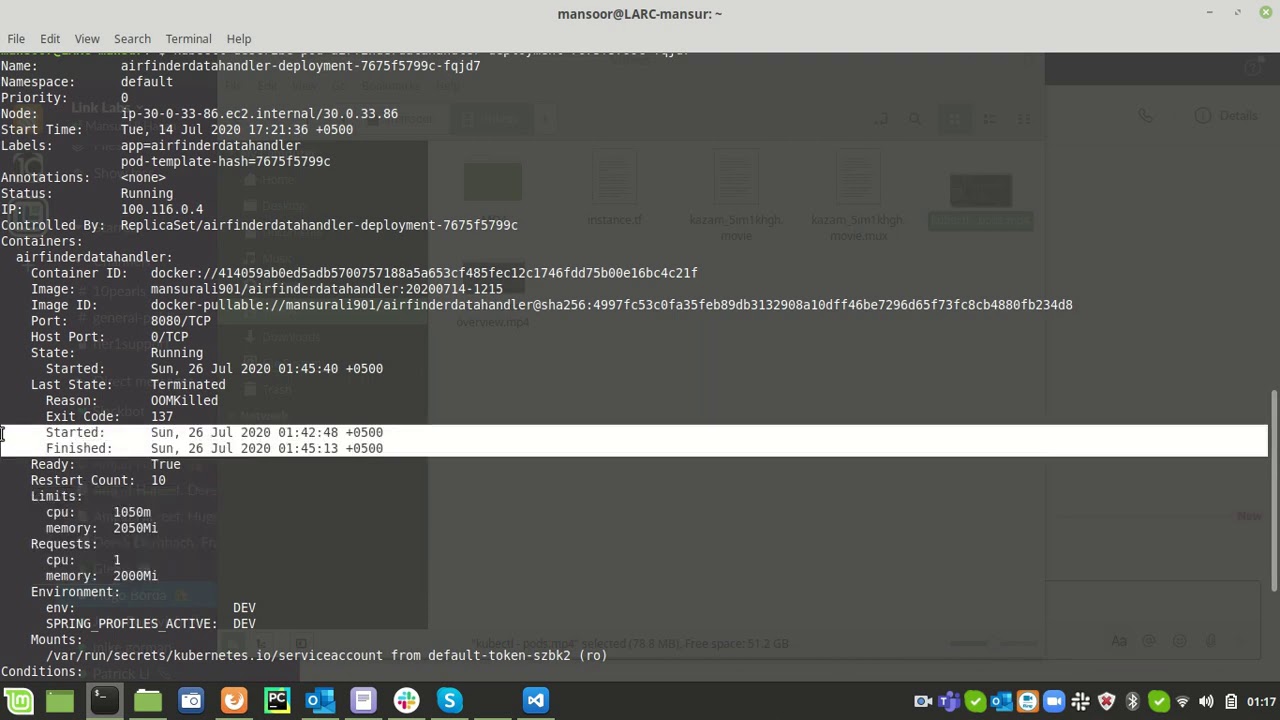

Why is my pod in ContainerCreating state?

Pods Stuck in ContainerCreating State

ContainerCreating, for example, means that kube-scheduler has assigned a worker node to the Pod and the kubelet on that node has instructed the container runtime (for example, the Docker daemon) to start the workload. Note, however, that the network is not necessarily provisioned at this stage; that is, the workload may not have an IP address yet.
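Because the cause of a stuck ContainerCreating pod is usually recorded in the pod's events (for example a FailedCreatePodSandBox event from the network plugin, or a volume that cannot be mounted), a quick first step is to list the affected pods and read their events. The sketch below assumes bash, awk and a kubectl already configured for the cluster; the function names list_container_creating and show_pod_events are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; assumes kubectl is configured for the target cluster.

# Print the names of pods in a namespace whose STATUS column is ContainerCreating.
# With --no-headers the columns are: NAME READY STATUS RESTARTS AGE.
list_container_creating() {
  local ns="${1:-default}"
  kubectl get pods -n "$ns" --no-headers \
    | awk '$3 == "ContainerCreating" { print $1 }'
}

# Print the events recorded for one pod, sorted by their last timestamp.
show_pod_events() {
  local ns="$1" pod="$2"
  kubectl get events -n "$ns" \
    --field-selector "involvedObject.name=${pod}" \
    --sort-by=.lastTimestamp
}
```

For example: for pod in $(list_container_creating my-namespace); do show_pod_events my-namespace "$pod"; done, where my-namespace is a placeholder.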

How do I manually restart a pod in Kubernetes?

- You can use docker restart {container_id} to restart a container in Docker, but there is no such restart command in Kubernetes. …

- Method 1 is a quicker solution, but the simplest way to restart Kubernetes pods is to use the rollout restart command.

Why is my Kubernetes pod not ready?

If a Pod is Running but not Ready, it means that the readiness probe is failing. While the readiness probe is failing, the Pod is not added to the Service's endpoints, so no traffic is forwarded to that instance.
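A minimal sketch for checking readiness from the command line, assuming bash and a configured kubectl: it reads the pod's Ready condition with a JSONPath query. The helper names and the namespace and pod arguments are hypothetical placeholders.

```shell
# Hypothetical helper: print the status of the pod's Ready condition
# ("True" or "False"), read from .status.conditions via JSONPath.
pod_ready_status() {
  local ns="$1" pod="$2"
  kubectl get pod "$pod" -n "$ns" \
    -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}'
}

# Succeed only when the Ready condition is exactly "True".
pod_is_ready() {
  [ "$(pod_ready_status "$1" "$2")" = "True" ]
}
```

If pod_is_ready fails, kubectl describe pod usually shows “Readiness probe failed” events that explain why.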

What happens when a Kubernetes pod fails?

If a Pod is scheduled to a node that then fails, the Pod is deleted; likewise, a Pod will not survive an eviction due to a lack of resources or Node maintenance. Kubernetes uses a higher-level abstraction, called a controller, that handles the work of managing the relatively disposable Pod instances.

What does “is waiting to start” mean for a CreateContainerError?

If the error is “is waiting to start”, this means that an object mounted by the container is missing. Assuming you have already checked for a missing ConfigMap or Secret, the container may require a storage volume or another object that does not exist.
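To rule out the missing ConfigMap or Secret case mentioned above, a sketch like the following (assuming bash and a configured kubectl) reads the ConfigMap and Secret volumes a pod references and checks that each one exists. It only looks at volumes, not at env or envFrom references, and the function name is hypothetical.

```shell
# Hypothetical helper: report ConfigMap and Secret volumes of a pod that
# do not exist in its namespace. Returns 1 if anything is missing.
check_mounted_objects() {
  local ns="$1" pod="$2" name missing=0
  # ConfigMaps referenced as volumes.
  for name in $(kubectl get pod "$pod" -n "$ns" \
      -o jsonpath='{.spec.volumes[*].configMap.name}'); do
    if ! kubectl get configmap "$name" -n "$ns" >/dev/null 2>&1; then
      echo "missing configmap: $name"
      missing=1
    fi
  done
  # Secrets referenced as volumes.
  for name in $(kubectl get pod "$pod" -n "$ns" \
      -o jsonpath='{.spec.volumes[*].secret.secretName}'); do
    if ! kubectl get secret "$name" -n "$ns" >/dev/null 2>&1; then
      echo "missing secret: $name"
      missing=1
    fi
  done
  return "$missing"
}
```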

What does CrashLoopBackOff mean?

CrashLoopBackOff is a status message indicating that one of your pods is in a constant state of flux: one or more of its containers are failing and restarting repeatedly. The containers keep restarting because every pod gets a default restartPolicy of Always upon creation, which means that every container that exits is restarted, with an increasing back-off delay between attempts.
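A short sketch for confirming this, assuming bash and a configured kubectl: it prints the pod's restartPolicy, the restart count of each container, and the logs of the previous (crashed) container instance, which usually contain the actual error. The function name is hypothetical; for pods with several containers, kubectl logs also needs -c and a container name.

```shell
# Hypothetical helper: summarize why a pod is crash-looping.
crashloop_summary() {
  local ns="$1" pod="$2"
  # restartPolicy is Always when the manifest does not set it.
  echo "restartPolicy: $(kubectl get pod "$pod" -n "$ns" -o jsonpath='{.spec.restartPolicy}')"
  # Restart count of each container in the pod, space-separated.
  echo "restartCounts: $(kubectl get pod "$pod" -n "$ns" -o jsonpath='{.status.containerStatuses[*].restartCount}')"
  # Logs from the previous (crashed) instance of the container.
  kubectl logs "$pod" -n "$ns" --previous
}
```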

How do you manually restart a pod?

- $ minikube start

- $ kubectl get pods

- $ touch deployment.yaml

- $ kubectl create -f deployment.yaml

- $ kubectl get pods

- $ kubectl rollout restart deployment <deployment name>
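The list above creates deployment.yaml with touch but does not show what goes in it. A minimal manifest for trying the steps might look like the sketch below; the Deployment name demo-web, the label app: demo-web and the image nginx:1.25 are hypothetical choices, and bash plus a kubectl configured for the cluster (for example minikube) are assumed.

```shell
# Hypothetical: write a minimal Deployment manifest to ./deployment.yaml.
write_demo_deployment() {
  cat > deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: demo-web
  template:
    metadata:
      labels:
        app: demo-web
    spec:
      containers:
        - name: web
          image: nginx:1.25
EOF
}

# Run the steps from the list: create, inspect, then restart.
create_and_restart() {
  write_demo_deployment
  kubectl create -f deployment.yaml
  kubectl get pods
  # Gradually replaces the pods (rolling restart).
  kubectl rollout restart deployment demo-web
}
```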

See more details on the topic kubernetes pod stuck in containercreating here:

Troubleshooting in Kubernetes: A Strategic Guide – Better …

1. Pods Stuck in ContainerCreating State · Lack of IPs in the IPPool configured in your CNI · /var/lib/docker on the node filling up: This deters …

Pods are stuck in “ContainerCreating” or “Terminating” status …

Pods on a specific node are stuck in ContainerCreating or Terminating status; in project openshift-sdn, sdn and ovs pods are in …

Pods Stuck in ContainerCreating with Error: “no space left on …

The error can be observed because the filesystem mounted on directory /var/lib/docker does not have free disk space. This issue could also be observed if the …

Pod Remains in ContainerCreating or Waiting | Tencent Cloud

If the value of limit is too small, the sandbox will fail to run. This will cause the Pod to remain in the ContainerCreating or Waiting status. This …
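Since a full /var/lib/docker is one of the causes listed above, a quick check on the affected node is how full that filesystem is. The sketch below assumes it runs on the node itself with POSIX df and awk; the function names and the 90 percent default threshold are hypothetical.

```shell
# Hypothetical helper, run on the node: print how full (in percent)
# the filesystem holding a directory is. Defaults to /var/lib/docker.
disk_used_percent() {
  local dir="${1:-/var/lib/docker}"
  # df -P prints one line per filesystem; column 5 is Capacity, e.g. "42%".
  df -P "$dir" 2>/dev/null | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Print OK or WARNING; returns 1 when usage reaches the limit, 2 on error.
check_disk() {
  local dir="${1:-/var/lib/docker}" limit="${2:-90}" used
  used="$(disk_used_percent "$dir")"
  if [ -z "$used" ]; then
    echo "cannot read disk usage for $dir" >&2
    return 2
  fi
  if [ "$used" -ge "$limit" ]; then
    echo "WARNING: $dir is ${used}% full"
    return 1
  fi
  echo "OK: $dir is ${used}% full"
}
```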

Can we restart a pod in Kubernetes?

As of Kubernetes 1.15, you can do a rolling restart of all pods in a deployment without taking the service down.
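kubectl rollout restart works by adding a kubectl.kubernetes.io/restartedAt annotation to the Deployment's pod template, which changes the template and triggers an ordinary rolling update. With an older kubectl that lacks the command, the same effect can be sketched with kubectl patch; the annotation key example.com/restarted-at and the function name are hypothetical, and bash plus date are assumed.

```shell
# Hypothetical helper: trigger a rolling restart by changing an annotation
# on the pod template, for clients without "kubectl rollout restart".
patch_restart() {
  local ns="$1" deploy="$2" stamp
  stamp="$(date -u +%Y-%m-%dT%H:%M:%SZ)"
  kubectl patch deployment "$deploy" -n "$ns" -p \
    "{\"spec\":{\"template\":{\"metadata\":{\"annotations\":{\"example.com/restarted-at\":\"${stamp}\"}}}}}"
}
```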

How do you fix a CrashLoopBackOff Kubernetes pod?

- Configure and Recheck Your Files. A misconfigured or missing configuration file can cause the CrashLoopBackOff error, preventing the container from starting correctly. …

- Be Vigilant With Third-Party Services. …

- Check Your Environment Variables. …

- Check Kube-DNS. …

- Check File Locks.
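Two items from the list above, environment variables and Kube-DNS, can be checked quickly from the command line. This sketch assumes bash and a configured kubectl, and that the cluster DNS pods carry the common k8s-app=kube-dns label (CoreDNS deployments usually keep it); the function names are hypothetical.

```shell
# Hypothetical helper: list the environment variables defined in a
# Deployment's pod template.
show_deploy_env() {
  local ns="$1" deploy="$2"
  kubectl set env "deployment/${deploy}" -n "$ns" --list
}

# Hypothetical helper: show the cluster DNS pods in kube-system.
show_dns_pods() {
  kubectl get pods -n kube-system -l k8s-app=kube-dns
}
```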

Why is my pod stuck pending?

If a Pod is stuck in Pending, it means that it cannot be scheduled onto a node. Generally this is because there are insufficient resources of one type or another that prevent scheduling.
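To see which resource is missing, the scheduler's FailedScheduling events usually say so directly (for example “Insufficient cpu”). A sketch assuming bash and a configured kubectl; the function names are hypothetical.

```shell
# Hypothetical helper: all pods in every namespace whose phase is Pending.
list_pending() {
  kubectl get pods -A --field-selector=status.phase=Pending
}

# Hypothetical helper: scheduler events explaining why pods in a
# namespace could not be placed on any node.
scheduling_failures() {
  local ns="${1:-default}"
  kubectl get events -n "$ns" --field-selector reason=FailedScheduling
}
```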

How do I check if a Kubernetes pod is failing?

If you are using a cloud environment, you can use the cloud provider's integrated logging tools (for example, in Google Cloud Platform you can use Stackdriver, now called Cloud Logging). If you want to check logs to find the reason why a pod failed, this is well described in the Kubernetes documentation page Debug Running Pods.
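From kubectl itself, the reason the previous instance of each container stopped (for example OOMKilled or Error) is stored in the pod status. The sketch below, assuming bash and a configured kubectl, prints it with a JSONPath range expression; the function name is hypothetical.

```shell
# Hypothetical helper: print each container's name and the reason its
# last instance terminated, one container per line.
last_termination() {
  local ns="$1" pod="$2"
  kubectl get pod "$pod" -n "$ns" -o jsonpath='{range .status.containerStatuses[*]}{.name}{"\t"}{.lastState.terminated.reason}{"\n"}{end}'
}
```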

What happens if a Kubernetes master node fails?

In a highly available cluster with several master nodes, the Kubernetes cluster is still accessible after one master node fails. Even with one node down, all the important components keep running, so you can still create more pods, deployments, services, etc.

What happens when one of your Kubernetes nodes fails?

Regardless of the workload type (StatefulSet or Deployment), Kubernetes will automatically evict the pod on the failed node and then try to recreate it with the old volumes. If the node is back online within 5 – 6 minutes of the failure, Kubernetes will restart the pods and unmount and re-mount the volumes.

What if a node goes down in Kubernetes?

If the crashed node recovers by itself or the user reboots the node, no additional actions are required to release its pods. The pods recover automatically after the node restores itself and joins the cluster. When a crashed node is recovered, the following occurs: The pod with the Unknown status is deleted.
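To find out which pods are affected when a node fails, it helps to list the nodes that are not Ready and then the pods bound to them. A sketch assuming bash, awk and a configured kubectl; the function names are hypothetical.

```shell
# Hypothetical helper: node names whose STATUS column is anything other
# than exactly "Ready" (this includes NotReady, and also cordoned nodes
# shown as Ready,SchedulingDisabled).
not_ready_nodes() {
  kubectl get nodes --no-headers | awk '$2 != "Ready" { print $1 }'
}

# Hypothetical helper: pods in all namespaces bound to the given node.
pods_on_node() {
  kubectl get pods -A -o wide --field-selector "spec.nodeName=$1"
}
```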

How do you restart deployment in Kubernetes?

- Determine the deployment name by running the following command: kubectl -n namespace get deployment | grep CR_name. …

- Restart pods by running the appropriate kubectl commands, shown in Table 1. …

- Verify that all Management pods are ready by running the following command:
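The three steps above can be sketched for a single Deployment as follows, assuming bash and a configured kubectl. Instead of the grep filter and the commands from Table 1 (which are not reproduced here), it checks the Deployment by name and waits with kubectl rollout status; the function name and the 300-second timeout are hypothetical.

```shell
# Hypothetical helper: restart one Deployment and wait for its new pods.
restart_and_verify() {
  local ns="$1" name="$2"
  # Step 1: confirm the deployment exists.
  kubectl -n "$ns" get deployment "$name" >/dev/null || return 1
  # Step 2: rolling restart of its pods.
  kubectl -n "$ns" rollout restart "deployment/${name}" || return 1
  # Step 3: block until the rollout finishes, or give up after the timeout.
  kubectl -n "$ns" rollout status "deployment/${name}" --timeout=300s
}
```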

How do I get rid of pod Kubernetes?

Deleting a pod is simple. To delete the pod you have created, just run kubectl delete pod nginx. Be sure to confirm the name of the pod you want to delete before pressing Enter. If the pod was deleted successfully, pod "nginx" deleted will appear in the terminal.
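If the pod stays in Terminating (for example because its node is unreachable), a normal delete can hang. kubectl delete supports --timeout, and --grace-period=0 together with --force removes the pod object without waiting for the kubelet to confirm; the container may still be running on the node, so this is a last resort. A sketch assuming bash and a configured kubectl, with a hypothetical function name and a 60-second timeout chosen for illustration.

```shell
# Hypothetical helper: delete a pod normally, and force-delete it only if
# the normal delete does not finish within the timeout.
delete_pod() {
  local ns="$1" pod="$2"
  if ! kubectl delete pod "$pod" -n "$ns" --timeout=60s; then
    # Removes the object from the API server without waiting for the kubelet.
    kubectl delete pod "$pod" -n "$ns" --grace-period=0 --force
  fi
}
```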

How do I get POD details in Kubernetes?

- kubectl get – list resources.

- kubectl describe – show detailed information about a resource.

- kubectl logs – print the logs from a container in a pod.

- kubectl exec – execute a command on a container in a pod.
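These read-only commands can be combined into one report for a pod, as in the sketch below (assuming bash and a configured kubectl; the function name is hypothetical). kubectl exec is left out because it is normally used interactively, for example kubectl exec -it <pod> -- sh when the image contains a shell.

```shell
# Hypothetical helper: print the basic details of one pod.
pod_report() {
  local ns="$1" pod="$2"
  kubectl get pod "$pod" -n "$ns" -o wide
  kubectl describe pod "$pod" -n "$ns"
  # --all-containers prints logs from every container in the pod.
  kubectl logs "$pod" -n "$ns" --all-containers=true
}
```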

How do I delete a CrashLoopBackOff pod?

type=OnDelete. In case of node failure, the pod will be recreated on a new node after some time, and the old pod will be removed after the broken node has fully recovered. Note that this will not happen if your pod was created by a DaemonSet or StatefulSet.

What is the most common reason for a pod to report CrashLoopBackOff as its state?

The most common reason is that the application inside the container is failing because of an error: the container exits soon after it starts, and the kubelet keeps restarting it.

How do I resolve “Back-off restarting failed container”?

If you receive the “Back-Off restarting failed container” message, then your container probably exited soon after Kubernetes started it. If the liveness probe isn’t returning a successful status, verify that the liveness probe is configured correctly for the application.

How do you restart a kube-system pod?

- Log in to the central or regional microservices VM through SSH.

- Run the following command to view the status of the kube-system pods: root@host:~/# kubectl get pods --namespace=kube-system. …

- Run the following command to restart kube-proxy: root@host:~/# kubectl apply -f /etc/kubernetes/manifests/kube-proxy.yaml.
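On many clusters, including those created with kubeadm, kube-proxy runs as a DaemonSet named kube-proxy in kube-system rather than from a file under /etc/kubernetes/manifests. Assuming that layout, bash and a configured kubectl, rollout restart works for DaemonSets too; the function name is hypothetical.

```shell
# Hypothetical helper: restart kube-proxy when it runs as a DaemonSet,
# then wait until every node runs an updated kube-proxy pod.
restart_kube_proxy() {
  kubectl -n kube-system rollout restart daemonset/kube-proxy &&
    kubectl -n kube-system rollout status daemonset/kube-proxy --timeout=300s
}
```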

How do you restart all pods in a namespace?

- kubectl -n {NAMESPACE} rollout restart deploy

- As a script (for example kubebounce.sh): deploys=$(kubectl -n $1 get deployments | tail -n +2 | cut -d ' ' -f 1); for deploy in $deploys; do kubectl -n $1 rollout restart deployments/$deploy; done

- ./kubebounce.sh {NAMESPACE}

What is a rolling restart in Kubernetes?

A rolling restart is used to restart every pod of a deployment. For a rolling restart, run the following command: kubectl rollout restart deployment <deployment name>. After you run it, Kubernetes gradually shuts down and replaces the pods, so some containers are always running.
